ChatGPT can provide some impressive results, and also sometimes some very poor advice. But while it’s free to talk with ChatGPT in theory, often you end up with messages about the system being at capacity, or hitting your maximum number of chats for the day, with a prompt to subscribe to ChatGPT Plus. Also, all of your queries are taking place on ChatGPT’s server, which means that you need Internet access and that OpenAI can see what you’re doing.

Fortunately, there are ways to run a ChatGPT-like LLM (Large Language Model) on your local PC, using the power of your GPU. The oobabooga text generation webui might be just what you’re after, so we ran some tests to find out what it could (and couldn’t!) do, which means we also have some benchmarks.

Getting the webui running wasn’t quite as simple as we had hoped, in part due to how fast everything is moving within the LLM space. There are the basic instructions in the readme, the one-click installers, and then multiple guides for how to build and run the LLaMa 4-bit models. We encountered varying degrees of success and failure, but with some help from Nvidia and others, we finally got things working. And then the repository was updated and our instructions broke, but a workaround/fix was posted today. Again, it’s moving fast!

It’s like running Linux and only Linux, and then wondering how to play the latest games. Sometimes you can get it working; other times you’re presented with error messages and compiler warnings that you have no idea how to solve. We’ll provide our version of the instructions below for those who want to give this a shot on their own PCs. You may also find some helpful people in the LMSys Discord, who were good about helping me with some of my questions.

It might seem obvious, but let’s also just get this out of the way: You’ll need a GPU with a lot of memory, and probably a lot of system memory as well, should you want to run a large language model on your own hardware. It’s right there in the name. A lot of the work to get things running on a single GPU (or a CPU) has focused on reducing the memory requirements.

Using the base models with 16-bit data, for example, the best you can do with an RTX 4090, RTX 3090 Ti, RTX 3090, or Titan RTX (cards that all have 24GB of VRAM) is to run the model with seven billion parameters (LLaMa-7b). That’s a start, but very few home users are likely to have such a graphics card, and it runs quite poorly. Thankfully, there are other options.

Loading the model with 8-bit precision cuts the memory requirements in half, meaning you could run LLaMa-7b with many of the best graphics cards: anything with at least 10GB of VRAM could potentially suffice. Even better, loading the model with 4-bit precision halves the VRAM requirements yet again, allowing LLaMa-13b to work on 10GB of VRAM. (You’ll also need a decent amount of system memory, probably 32GB or more; that’s what we used, at least.)
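As a back-of-the-envelope check on those numbers, weight storage scales with parameter count times bits per weight. The sketch below is ours, not part of the webui. It assumes round parameter counts (7, 13, and 30 billion) and counts only the weights, so real VRAM use will be higher once activations and framework overhead are added.

```python
# Rough estimate of the memory needed just to hold LLaMa weights.
# Assumption: bytes = parameters * bits / 8. This ignores activations,
# the CUDA context, and framework overhead, all of which add more.

def weight_gib(params_billion: float, bits: int) -> float:
    """Approximate weight storage in GiB for a model stored at `bits` per weight."""
    total_bytes = params_billion * 1e9 * bits / 8
    return total_bytes / 2**30

for name, params in [("LLaMa-7b", 7), ("LLaMa-13b", 13), ("LLaMa-30b", 30)]:
    row = ", ".join(
        f"{bits}-bit: {weight_gib(params, bits):5.1f} GiB" for bits in (16, 8, 4)
    )
    print(f"{name:10s} {row}")
```

By this estimate, LLaMa-7b at 16-bit needs about 13 GiB for its weights, which fits on a 24GB card. LLaMa-13b at 16-bit needs about 24 GiB and does not fit. At 4-bit, LLaMa-13b drops to roughly 6 GiB, which leaves headroom on a 10GB card.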

Getting the models isn’t too difficult at least, but they can be very large. LLaMa-13b, for example, consists of a 36.3 GiB download for the main data, and then another 6.5 GiB for the pre-quantized 4-bit model. Do you have a graphics card with 24GB of VRAM and 64GB of system memory? Then the 30 billion parameter model is only a 75.7 GiB download, plus another 15.7 GiB for the 4-bit files. There’s even a 65 billion parameter model, in case you have an Nvidia A100 40GB PCIe card handy, along with 128GB of system memory (well, 128GB of memory plus swap space). Hopefully the people downloading these models don’t have a data cap on their internet connection.


Testing Text Generation Web UI Performance

In theory, you can get the text generation web UI running on Nvidia’s GPUs via CUDA, or AMD’s graphics cards via ROCm. The latter requires running Linux, and after fighting with that stuff to do Stable Diffusion benchmarks earlier this year, I just gave it a pass for now. If you have working instructions on how to get it running (under Windows 11, though using WSL2 is allowed) and you want me to try them, hit me up and I’ll give it a shot. But for now I’m sticking with Nvidia GPUs.

I encountered some fun errors when trying to run the llama-13b-4bit models on older Turing architecture cards like the RTX 2080 Ti and Titan RTX. Everything seemed to load just fine, and it would even spit out responses and give a tokens-per-second stat, but the output was garbage. Starting with a fresh environment while running a Turing GPU seems to have fixed the problem, so we have three generations of Nvidia RTX GPUs.

While in theory we could try running these models on non-RTX GPUs and cards with less than 10GB of VRAM, we wanted to use the llama-13b model, as that should give superior results to the 7b model. Looking at the Turing, Ampere, and Ada Lovelace architecture cards with at least 10GB of VRAM, that gives us 11 total GPUs to test. We felt that was better than restricting things to 24GB GPUs and using the llama-30b model.

For these tests, we used a Core i9-12900K running Windows 11. You can see the full specs in the boxout. We used reference Founders Edition models for most of the GPUs, though there’s no FE for the 4070 Ti, 3080 12GB, or 3060, and we only have the Asus 3090 Ti.

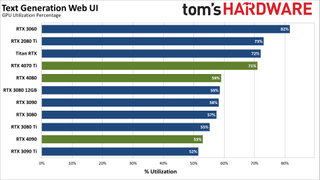

In theory, there should be a pretty massive difference between the fastest and slowest GPUs in that list. In practice, at least using the code that we got working, other bottlenecks are definitely a factor. It’s not clear whether we’re hitting VRAM latency limits, CPU limitations, or something else (probably a combination of factors), but your CPU definitely plays a role. We tested an RTX 4090 on a Core i9-9900K and the 12900K, for example, and the latter was almost twice as fast.

It looks like at least some of the work ends up being primarily limited by single-threaded CPU performance. That would explain the big improvement in going from the 9900K to the 12900K. Still, we’d love to see scaling well beyond what we were able to achieve with these initial tests.

Given the rate of change happening with the research, models, and interfaces, it’s a safe bet that we’ll see plenty of improvement in the coming days. So, don’t take these performance metrics as anything more than a snapshot in time. We may revisit the testing at a future date, hopefully with additional tests on non-Nvidia GPUs.

We ran oobabooga’s web UI with the following command, for reference. More on how to do this below.

python server.py --gptq-bits 4 --model llama-13b

Text Generation Web UI Benchmarks (Windows)

Again, we want to preface the charts below with the following disclaimer: These results don’t necessarily make a ton of sense if we think about the traditional scaling of GPU workloads. Normally you end up either GPU compute constrained, or limited by GPU memory bandwidth, or some combination of the two. There are definitely other factors at play with this particular AI workload, and we have some additional charts to help explain things a bit.

Running on Windows is likely a factor as well, but considering 95% of people are likely running Windows compared to Linux, this is more information on what to expect right now. We wanted tests that we could run without having to deal with Linux, and obviously these preliminary results are more of a snapshot in time of how things are running than a final verdict. Please take them as such.


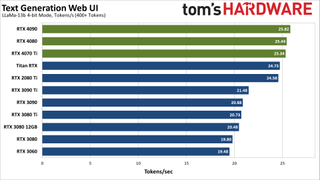

We ran the test prompt 30 times on each GPU, with a maximum of 500 tokens. We discarded any results that had fewer than 400 tokens (because those do less work), and also discarded the first two runs (warming up the GPU and memory). Then we sorted the results by speed and took the average of the ten fastest remaining results.
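The filtering described above can be written as a short function. This is a hedged reconstruction of the procedure in Python, not the script we actually used. The function name `summarize_runs`, the (tokens, tokens_per_second) input format, and the sample data are all hypothetical and only for illustration.

```python
from statistics import mean

def summarize_runs(runs, min_tokens=400, warmup=2, keep=10):
    """Reduce one GPU's benchmark runs to a single tokens/s figure.

    `runs` is a list of (tokens_generated, tokens_per_second) tuples,
    in the order the runs were executed.
    """
    measured = runs[warmup:]  # skip the warm-up runs
    # Short responses do less work, so drop them.
    speeds = [tps for tokens, tps in measured if tokens >= min_tokens]
    fastest = sorted(speeds, reverse=True)[:keep]  # ten fastest survivors
    if not fastest:
        raise ValueError("no runs met the filtering criteria")
    return mean(fastest)

# Hypothetical data: two warm-up runs (excluded regardless of speed),
# ten full-length runs, one short run, and seventeen slower runs.
runs = [(500, 50.0), (500, 45.0)]
runs += [(500, 20.0 + i) for i in range(10)]
runs += [(350, 99.0)]
runs += [(450, 5.0)] * 17
print(len(runs), summarize_runs(runs))  # 30 24.5
```

Dropping the warm-up runs by position before any sorting matters here. "First two runs" refers to execution order, not to speed.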

Generally speaking, the speed of response on any given GPU was pretty consistent, within a 7% range at most on the tested GPUs, and often within a 3% range. That’s on one PC, however; on a different PC with a Core i9-9900K and an RTX 4090, our performance was around 40 percent slower than on the 12900K.

Our prompt for the following charts was: “How much computational power does it take to simulate the human brain?”

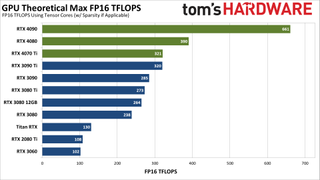

Our fastest GPU was indeed the RTX 4090, but… it’s not really that much faster than other options. Considering it has roughly twice the compute, twice the memory, and twice the memory bandwidth of the RTX 4070 Ti, you’d expect more than a 2% improvement in performance. That didn’t happen, not even close.

The situation with RTX 30-series cards isn’t all that different. The RTX 3090 Ti comes out as the fastest Ampere GPU for these AI Text Generation tests, but there’s almost no difference between it and the slowest Ampere GPU, the RTX 3060, considering their specifications. A 10% advantage is hardly worth speaking of!

And then look at the two Turing cards, which actually landed higher up the charts than the Ampere GPUs. That simply shouldn’t happen if we were dealing with GPU compute limited scenarios. Maybe the current software is simply better optimized for Turing, maybe it’s something in Windows or the CUDA versions we used, or maybe it’s something else. It’s weird, is really all I can say.

These results shouldn’t be taken as a sign that everyone interested in getting involved with AI LLMs should run out and buy RTX 3060 or RTX 4070 Ti cards, or particularly old Turing GPUs. We recommend the exact opposite, as the cards with 24GB of VRAM are able to handle more complex models, which can lead to better results. And even the most powerful consumer hardware still pales in comparison to data center hardware: Nvidia’s A100 can be had with 40GB or 80GB of HBM2e, while the newer H100 defaults to 80GB. I certainly won’t be surprised if eventually we see an H100 with 160GB of memory, though Nvidia hasn’t said it’s actually working on that.

For example, the 4090 (and other 24GB cards) can all run the LLaMa-30b 4-bit model, whereas the 10–12 GB cards are at their limit with the 13b model. 165b models also exist, which would require at least 80GB of VRAM and probably more, plus gobs of system memory. And that’s just for inference; training workloads require even more memory!

“Tokens,” for reference, are basically the same as “words,” except they can include things that aren’t strictly words, like parts of a URL or formula. So when we give a result of 25 tokens/s, that’s like someone typing at about 1,500 words per minute. That’s pretty darn fast, though obviously if you’re trying to run queries from multiple users that could quickly feel inadequate.
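The arithmetic behind those figures is simple enough to sketch. Both helper names below are hypothetical. The one-word-per-token default follows the rough equivalence above. The per-user figure assumes the GPU's output is simply divided evenly among users with no batching, which real serving setups may improve on.

```python
def tokens_per_sec_to_wpm(tokens_per_sec, words_per_token=1.0):
    """Express generation speed as an equivalent typing speed.

    words_per_token=1.0 follows the rough "a token is about a word"
    equivalence; tokenizers that emit more tokens than words would
    correspond to a smaller value and a lower result.
    """
    return tokens_per_sec * words_per_token * 60

def naive_per_user_rate(total_tokens_per_sec, users):
    """Tokens/s each user sees if one GPU's output is split evenly, with no batching."""
    return total_tokens_per_sec / users

print(tokens_per_sec_to_wpm(25))    # 1500.0
print(naive_per_user_rate(25, 10))  # 2.5
```

In this example, ten hypothetical simultaneous users would each see about 2.5 tokens/s, which is where the "quickly feel inadequate" concern comes from.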

Here’s a different look at the various GPUs, using only the theoretical FP16 compute performance. Now, we’re actually using 4-bit integer inference on the Text Generation workloads, but integer operation compute (Teraops or TOPS) should scale similarly to the FP16 numbers. Also note that the Ada Lovelace cards have double the theoretical compute when using FP8 instead of FP16, but that isn’t a factor here.

If there are inefficiencies in the current Text Generation code, those will probably get worked out in the coming months, at which point we could see more like double the performance from the 4090 compared to the 4070 Ti, which in turn would be roughly triple the performance of the RTX 3060. We’ll have to wait and see how these projects develop over time.

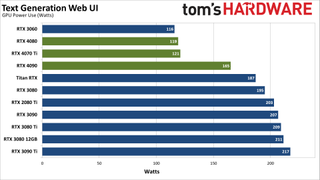

These final two charts are simply to illustrate that the current results may not be indicative of what we can expect in the future. Running Stable Diffusion, for example, the RTX 4070 Ti hits 99–100% GPU utilization and consumes around 240W, while the RTX 4090 nearly doubles that, with double the performance as well.

With Oobabooga Text Generation, we see generally higher GPU utilization the lower down the product stack we go, which does make sense: More powerful GPUs won’t need to work as hard if the bottleneck lies with the CPU or some other component. Power use, on the other hand, doesn’t always align with what we’d expect. The RTX 3060 having the lowest power use makes sense. The 4080 using less power than the (custom) 4070 Ti, or the Titan RTX consuming less power than the 2080 Ti, simply shows that there’s more going on behind the scenes.

Long term, we expect the various chatbots (or whatever you want to call these “lite” ChatGPT experiences) to improve significantly. They’ll get faster, generate better results, and make better use of the available hardware. Now, let’s talk about what sort of interactions you can have with text-generation-webui.

Chatting With Text Generation Web UI

The Text Generation project doesn’t make any claims of being anything like ChatGPT, and well it shouldn’t. ChatGPT will at least attempt to write poetry, stories, and other content. In its default mode, TextGen running the LLaMa-13b model feels more like asking a really slow Google to provide text summaries of a question. But the context can change the experience quite a lot.

Many of the responses to our query about simulating a human brain appear to be from forums, Usenet, Quora, or various other websites, even though they’re not. This is sort of funny when you think about it. You ask the model a question, it decides it looks like a Quora question, and thus mimics a Quora answer; or at least that’s our understanding. It still feels odd when it puts in things like “Jason, age 17” after some text, when apparently there’s no Jason asking such a question.

Again, ChatGPT this is not. But you can run it in a different mode than the default. Passing “--cai-chat”, for example, gives you a modified interface and an example character to chat with, Chiharu Yamada. And if you like relatively short responses that sound a bit like they come from a teenager, the chat might pass muster. It just won’t provide much in the way of deeper conversation, at least in my experience.

Perhaps you can give it a better character or prompt; there are examples out there. There are plenty of other LLMs as well; LLaMa was just our choice for getting these initial test results done. You could probably even configure the software to respond to people on the web, and since it’s not actually “learning” (there’s no training taking place on the existing models you run), you can rest assured that it won’t suddenly turn into Microsoft’s Tay Twitter bot after 4chan and the internet start interacting with it. Just don’t expect it to write coherent essays for you.

Getting Text-Generation-Webui to Run (on Nvidia)

Given the instructions on the project’s main page, you’d think getting this up and running would be pretty straightforward. I’m here to tell you that it’s not, at least right now, especially if you want to use some of the more interesting models. But it can be done. The base instructions, for example, tell you to use Miniconda on Windows. If you follow the instructions, you’ll likely end up with a CUDA error. Oops.

This more detailed set of instructions off Reddit should work, at least for loading in 8-bit mode. The main issue with CUDA gets covered in steps 7 and 8, where you download a CUDA DLL and copy it into a folder, then tweak a few lines of code. Download an appropriate model and you should hopefully be good to go. The 4-bit instructions totally failed for me the first few times I tried them (update: they seem to work now, though they’re using a different version of CUDA than our instructions). lxe has these alternative instructions, which also didn’t quite work for me.

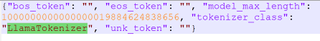

I got everything working eventually, with some help from Nvidia and others. The instructions I used are below… but then things stopped working on March 16, 2023, as the LLaMATokenizer spelling was changed to “LlamaTokenizer” and the code failed. Thankfully that was a relatively easy fix. But what will break next, and then get fixed a day or two later? We can only guess, but as of March 18, 2023, these instructions worked on several different test PCs.
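If you run into that tokenizer error, the fix (step 22 below) is a one-word edit to the model’s tokenizer_config.json. For readers who prefer to script it, here is a minimal Python sketch. `fix_tokenizer_class` is a hypothetical helper name. It performs the same LLaMATokenizer to LlamaTokenizer text substitution that step describes, and nothing more.

```python
from pathlib import Path

def fix_tokenizer_class(model_dir):
    """Replace the old LLaMATokenizer class name with LlamaTokenizer.

    Returns True if tokenizer_config.json was changed, False if it
    already used the new spelling.
    """
    config_path = Path(model_dir) / "tokenizer_config.json"
    text = config_path.read_text(encoding="utf-8")
    fixed = text.replace("LLaMATokenizer", "LlamaTokenizer")
    if fixed == text:
        return False
    config_path.write_text(fixed, encoding="utf-8")
    return True

# Example (folder layout from the steps below; adjust to your install):
# fix_tokenizer_class(r"C:\AIStuff\text-generation-webui\models\llama-7b")
```

Running it twice is harmless. The second call finds nothing to change and returns False.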

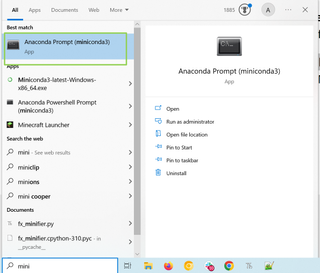

1. Install Miniconda for Windows using the default options. The top “Miniconda3 Windows 64-bit” link should be the right one to download.

2. Download and install Visual Studio 2019 Build Tools. Only select “Desktop development with C++” when installing. Version 16.11.25 from March 14, 2023, build 16.11.33423.256, should work.

3. Create a folder where you’re going to put the project files and models, e.g. C:\AIStuff.

4. Launch the Miniconda3 prompt. You can find it by searching Windows for it or on the Start Menu.

5. Run this command, including the quotes around it. It sets up the VC build environment so CL.exe can be found, and it requires the Visual Studio Build Tools from step 2.

"C:\Program Files (x86)\Microsoft Visual Studio\2019\BuildTools\VC\Auxiliary\Build\vcvars64.bat"

6. Enter the following commands, one at a time. Enter “y” if prompted to proceed after any of these.

conda create -n llama4bit

conda activate llama4bit

conda install python=3.10

conda install git

7. Switch to the folder (e.g. C:\AIStuff) where you want the project files.

cd C:\AIStuff

8. Clone the text generation UI with git.

git clone https://github.com/oobabooga/text-generation-webui.git

9. Enter the text-generation-webui folder, create a repositories folder underneath it, and change to it.

cd text-generation-webui

md repositories

cd repositories

10. Clone GPTQ-for-LLaMa with git and then move up one directory.

git clone https://github.com/qwopqwop200/GPTQ-for-LLaMa.git

cd ..

11. Enter the following command to install several required packages that are used to build and run the project. This can take a while to complete, and sometimes it errors out. Run it again if necessary; it will pick up where it left off.

pip install -r requirements.txt

12. Use this command to install more required dependencies. We’re using CUDA 11.7.0 here, though other versions may work as well.

conda install cuda pytorch torchvision torchaudio pytorch-cuda=11.7 -c pytorch -c nvidia/label/cuda-11.7.0

13. Check to see if CUDA Torch is properly installed. This should return “True” on the next line. If this fails, repeat step 12; if it still fails and you have an Nvidia card, post a note in the comments.

python -c "import torch; print(torch.cuda.is_available())"

14. Install ninja, cchardet, and chardet. Press y if prompted.

conda install ninja

pip install cchardet chardet

15. Change to the GPTQ-for-LLaMa directory.

cd repositories\GPTQ-for-LLaMa

16. Set up the environment for compiling the code.

set DISTUTILS_USE_SDK=1

17. Enter the following command. This generates a LOT of warnings and/or notes, though it still compiles okay. It can take a bit to complete.

python setup_cuda.py install

18. Return to the text-generation-webui folder.

cd C:\AIStuff\text-generation-webui

19. Download the model. This is a 12.5GB download and can take a bit, depending on your connection speed. We’ve specified the llama-7b-hf version, which should run on any RTX graphics card. If you have a card with at least 10GB of VRAM, you can use llama-13b-hf instead (it’s about three times as large at 36.3GB).

python download-model.py decapoda-research/llama-7b-hf

20. Rename the model folder. If you’re using the larger model, just replace 7b with 13b.

rename models\llama-7b-hf llama-7b

21. Download the 4-bit pre-quantized model from Hugging Face, “llama-7b-4bit.pt”, and place it in the “models” folder (next to the “llama-7b” folder from the previous two steps, e.g. “C:\AIStuff\text-generation-webui\models”). There are 13b and 30b models as well, though the latter requires a 24GB graphics card and 64GB of system memory to work.

22. Edit the tokenizer_config.json file in the text-generation-webui\models\llama-7b folder and change LLaMATokenizer to LlamaTokenizer. The capitalization is what matters.

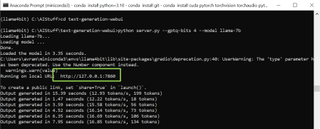

23. Enter the following command from within the C:\AIStuff\text-generation-webui folder. (Replace llama-7b with llama-13b if that’s what you downloaded; many other models exist and may generate better, or at least different, results.)

python server.py --gptq-bits 4 --model llama-7b

You’ll now get an IP address that you can visit in your web browser. The default is http://127.0.0.1:7860, though it will search for an open port if 7860 is in use (e.g. by Stable Diffusion).

24. Navigate to the URL in a browser.

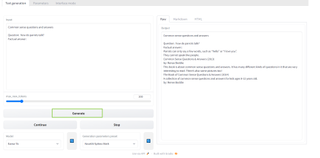

25. Try entering your prompts in the “input box” and click Generate.

26. Play around with the prompt, try other options, and have fun. You’ve earned it!
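Steps 19 and 21 include a few rules of thumb for picking a model size:

- The 7b model should run on any RTX card.
- The 13b model wants at least 10GB of VRAM.
- The 30b model needs a 24GB card plus 64GB of system memory.

A small, hypothetical helper can condense them. It is only as accurate as those rules of thumb, and it takes the advertised capacities in GB as input.

```python
def suggest_model(vram_gb, system_ram_gb):
    """Suggest a LLaMa folder name from the rules of thumb in steps 19 and 21."""
    if vram_gb >= 24 and system_ram_gb >= 64:
        return "llama-30b"  # 24GB card plus 64GB of system memory
    if vram_gb >= 10:
        return "llama-13b"  # at least 10GB of VRAM
    return "llama-7b"       # should run on any RTX card

for vram, ram in [(24, 64), (24, 32), (12, 32), (8, 16)]:
    print(f"{vram}GB VRAM, {ram}GB RAM -> {suggest_model(vram, ram)}")
```

Assuming you downloaded and renamed the matching model (steps 19 through 21), the returned name is what you would pass to --model in step 23.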

If something didn’t work at this point, check the command prompt for error messages, or hit us up in the comments. Maybe just try exiting the Miniconda command prompt and restarting it, activating the environment, and changing to the appropriate folder (steps 4, 6 (only the “conda activate llama4bit” part), 18, and 23).
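Before restarting, it can help to confirm that the files landed where steps 8 through 22 put them. The following is a minimal Python sketch with `check_layout` as a hypothetical helper. It assumes the folder names used above. It also assumes the 4-bit file follows the “llama-7b-4bit.pt” naming pattern, so “llama-13b-4bit.pt” for the 13b model.

```python
from pathlib import Path

def check_layout(webui_dir, model="llama-7b"):
    """List problems with the folder layout built in steps 8 through 22."""
    root = Path(webui_dir)
    config = root / "models" / model / "tokenizer_config.json"
    expected = [
        root / "server.py",                        # cloned in step 8
        root / "repositories" / "GPTQ-for-LLaMa",  # cloned in step 10
        config,                                    # model folder from steps 19-20
        root / "models" / f"{model}-4bit.pt",      # 4-bit weights from step 21
    ]
    problems = [f"missing: {path}" for path in expected if not path.exists()]
    if config.exists() and "LLaMATokenizer" in config.read_text(encoding="utf-8"):
        problems.append(f"{config} still uses LLaMATokenizer (see step 22)")
    return problems

# Example: print anything that looks wrong in the default install location.
for problem in check_layout(r"C:\AIStuff\text-generation-webui"):
    print(problem)
```

An empty list means the expected files are in place. It does not guarantee that the CUDA build from step 17 succeeded.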

Again, I’m also curious about what it will take to get this working on AMD and Intel GPUs. If you have working instructions for those, drop me a line and I’ll see about testing them. Ideally, the solution should use Intel’s matrix cores; for AMD, the AI cores overlap the shader cores but may still be faster overall.

Source: https://www.tomshardware.com/news/running-your-own-chatbot-on-a-single-gpu