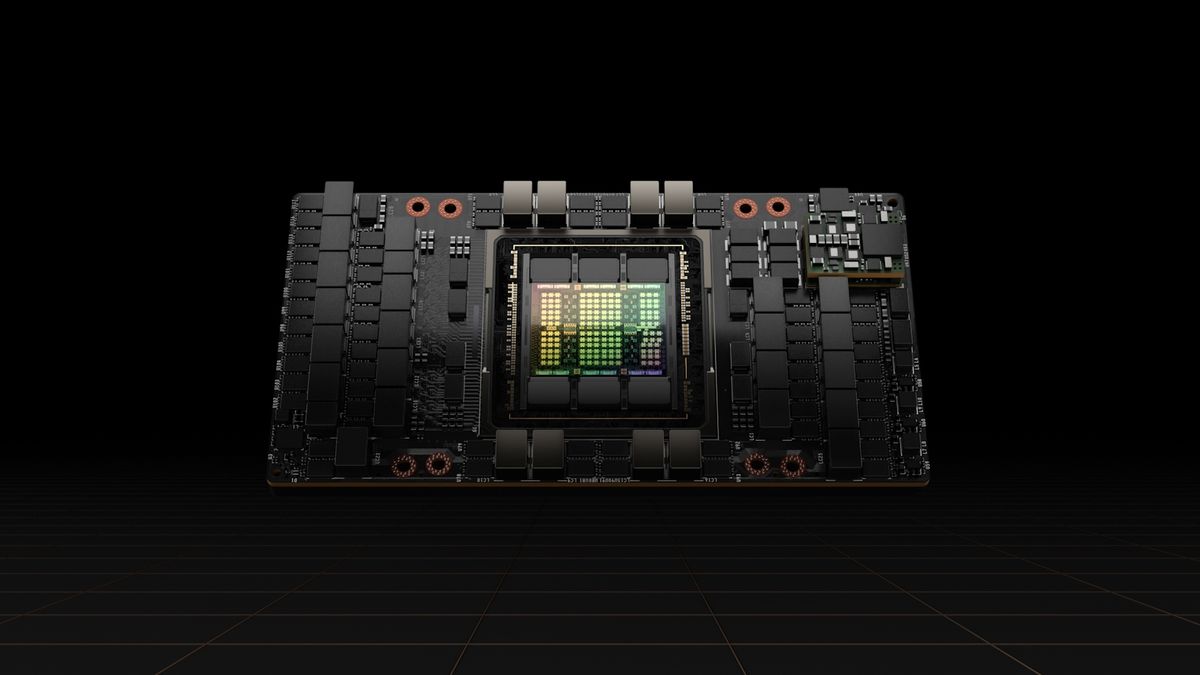

You won't find Nvidia's H100 (Hopper) GPU on the list of the best graphics cards. However, the H100's forte lies in artificial intelligence (AI), making it a coveted GPU in the AI industry. And now that everybody is jumping on the AI bandwagon, Nvidia's H100 has become even more popular.

Nvidia claims that the H100 delivers up to 9X faster AI training performance and up to 30X faster inference performance than the previous-generation A100 (Ampere). With performance at that level, it's easy to see why everyone wants to get their hands on an H100. In addition, Reuters reported that Nvidia has modified the H100 to comply with export rules so that the chipmaker can sell the altered H100 to China as the H800.

Last year, U.S. officials implemented several regulations to prevent Nvidia from selling its A100 and H100 GPUs to Chinese customers. The rules restricted exports of GPUs with chip-to-chip data transfer rates of 600 GBps or more. Transfer speed is paramount in the AI world, where systems have to move enormous amounts of data around to train AI models such as ChatGPT. Throttling the chip-to-chip data transfer rate results in a significant performance hit, because slower links increase the time it takes to move data between GPUs, which in turn increases training time.

With the A100, Nvidia trimmed the GPU's 600 GBps interconnect down to 400 GBps and rebranded it as the A800 to sell it in the Chinese market. Nvidia is taking a similar approach with the H100.

According to Reuters' source in the Chinese chip industry, Nvidia reduced the chip-to-chip data transfer rate on the H800 to roughly half that of the H100. That would leave the H800 with an interconnect limited to 300 GBps. That is a bigger cut than the one separating the A100 and A800, where the latter has a 33% lower chip-to-chip data transfer rate. However, the H100 is considerably faster than the A100, which could be why Nvidia imposed a more severe chip-to-chip data transfer limit on the H800.
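The percentages above, and the claim that a slower link stretches data-transfer time, can be checked with some back-of-the-envelope arithmetic. The sketch below uses only the interconnect figures quoted in this article; the H800's 300 GBps is Reuters' unconfirmed estimate, the 600 GBps H100 baseline is merely what the "roughly half" estimate implies (not an Nvidia specification), and the 100 GB payload is a hypothetical value chosen for illustration. The transfer-time model is deliberately idealized and ignores latency, protocol overhead, and overlap with compute.

```python
# Interconnect figures (GBps) as quoted in the article. The H800 number is an
# unconfirmed Reuters estimate; the H100 value is only the baseline implied by
# the "roughly half ... 300 GBps" estimate, not an official specification.
INTERCONNECT_GBPS = {
    "A100": 600,
    "A800": 400,
    "H100": 600,  # assumption: implied baseline, see comment above
    "H800": 300,
}


def percent_cut(full: float, reduced: float) -> float:
    """Percentage reduction when going from `full` to `reduced` bandwidth."""
    return (full - reduced) / full * 100


def transfer_seconds(payload_gb: float, rate_gbps: float) -> float:
    """Idealized time to move `payload_gb` gigabytes over a `rate_gbps` link.

    Ignores latency, protocol overhead, and overlap with computation.
    """
    return payload_gb / rate_gbps


if __name__ == "__main__":
    bw = INTERCONNECT_GBPS
    print(f"A100 -> A800 cut: {percent_cut(bw['A100'], bw['A800']):.1f}%")
    print(f"H100 -> H800 cut: {percent_cut(bw['H100'], bw['H800']):.1f}%")

    payload_gb = 100  # hypothetical amount of data exchanged between two GPUs
    for name, rate in bw.items():
        t = transfer_seconds(payload_gb, rate)
        print(f"{name}: {rate} GBps -> {t:.3f} s per {payload_gb} GB")
```

Because transfer time in this simple model scales inversely with bandwidth, halving the link doubles the time spent moving the same data, while the A800's one-third cut raises it by 50%. Real training jobs overlap communication with computation, so the end-to-end slowdown would typically be smaller than these raw ratios suggest.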

Reuters contacted an Nvidia spokesperson to ask what differentiates the H800 from the H100. However, the Nvidia representative only stated that "our 800-series products are fully compliant with export control regulations."

Nvidia already has three of the most prominent Chinese technology companies using the H800: Alibaba Group Holding, Baidu Inc, and Tencent Holdings. China has banned ChatGPT, so these tech giants are competing with each other to produce a domestic ChatGPT-like model for the Chinese market. And while an H800 with half the chip-to-chip transfer rate will undoubtedly be slower than the full-fat H100, it still won't be slow. With companies potentially using thousands of Hopper GPUs, however, we have to wonder whether this will mean using more H800s to accomplish the same work as fewer H100s.

Source via https://www.tomshardware.com/information/nvidia-gimps-h100-hopper-gpu-to-sell-as-h800-to-china