Nvidia has just published new performance numbers for its H100 compute GPU in MLPerf 3.0, the latest version of a prominent benchmark for deep learning workloads. The Hopper H100 processor not only surpasses its predecessor, the A100, in time-to-train measurements, but is also gaining performance thanks to software optimizations. In addition, Nvidia revealed early performance comparisons of its compact L4 compute GPU to its predecessor, the T4 GPU.

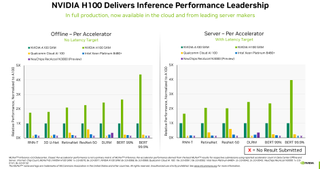

Nvidia first published H100 test results obtained in the MLPerf 2.1 benchmark back in September 2022, revealing that its flagship compute GPU can beat its predecessor A100 by up to 4.3–4.4 times in various inference workloads. The newly released performance numbers obtained in MLPerf 3.0 not only confirm that Nvidia’s H100 is faster than its A100 (no surprise), but also reaffirm that it is tangibly faster than Intel’s recently released Xeon Platinum 8480+ (Sapphire Rapids) processor as well as NeuChips’s ReccAccel N3000 and Qualcomm’s Cloud AI 100 solutions in a number of workloads.

These workloads include image classification (ResNet 50 v1.5), natural language processing (BERT Large), speech recognition (RNN-T), medical imaging (3D U-Net), object detection (RetinaNet), and recommendation (DLRM). Nvidia makes the point that not only are its GPUs faster, but they also have better support across the ML industry: some of the workloads failed on competing solutions.

There is a catch with the numbers published by Nvidia, though. Vendors have the option to submit their MLPerf results in two categories: closed and open. In the closed category, all vendors must run mathematically equivalent neural networks, whereas in the open category they can modify the networks to optimize performance for their hardware. Nvidia’s numbers only reflect the closed category, so optimizations that Intel or other vendors can introduce to improve the performance of their hardware are not reflected in these group results.

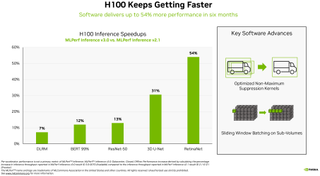

Software optimizations can bring huge benefits to modern AI hardware, as Nvidia’s own example shows. The company’s H100 gained anywhere from 7% in recommendation workloads to 54% in object detection workloads in MLPerf 3.0 vs. MLPerf 2.1, which is a sizeable performance uplift.
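The article mixes two ways of quoting gains: percentages (7% to 54%) and multipliers (up to 4.3–4.4 times). As a minimal sketch for relating the two, the hypothetical helpers below convert between them; the function names are illustrative assumptions and are not part of MLPerf or any Nvidia tooling. The only figures used are the ones quoted in the text.

```python
def uplift_to_speedup(percent_gain: float) -> float:
    """Convert a percentage gain (e.g. 54 for 54%) into a speedup multiplier."""
    return 1.0 + percent_gain / 100.0


def speedup_to_uplift(speedup: float) -> float:
    """Convert a speedup multiplier (e.g. 4.4 for 4.4x) into a percentage gain."""
    return (speedup - 1.0) * 100.0


# Gains quoted in the article for H100, MLPerf 3.0 vs. MLPerf 2.1.
quoted_gains = {"recommendation": 7.0, "object detection": 54.0}

for workload, pct in quoted_gains.items():
    print(f"{workload}: +{pct:.0f}% -> {uplift_to_speedup(pct):.2f}x")
```

Read this way, the software-driven gains correspond to multipliers of roughly 1.07x to 1.54x on the same hardware, while a 4.4x generational lead corresponds to a gain of about 340%.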

Referencing the explosion of ChatGPT and similar services, Dave Salvator, Director of AI, Benchmarking and Cloud at Nvidia, writes in a blog post: “At this iPhone moment of AI, performance on inference is vital… Deep learning is now being deployed nearly everywhere, driving an insatiable need for inference performance from factory floors to online recommendation systems.”

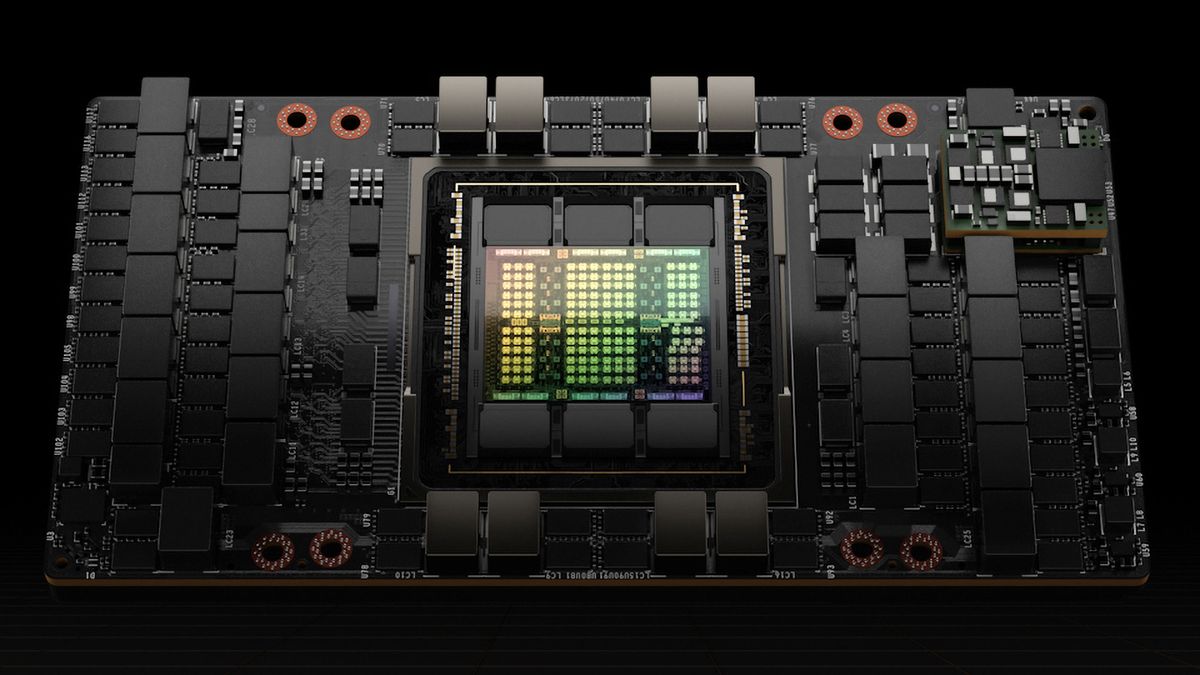

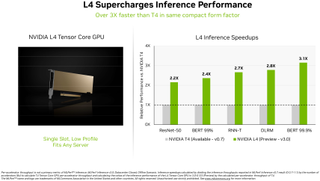

In addition to reaffirming that its H100 is the inference performance king in MLPerf 3.0, the company also gave a sneak peek at the performance of its recently released AD104-based L4 compute GPU. This Ada Lovelace-powered compute GPU card comes in a single-slot, low-profile form factor to fit into any server, yet it delivers quite formidable performance: up to 30.3 FP32 TFLOPS for general compute and up to 485 FP8 TFLOPS (with sparsity).

Nvidia only compared its L4 to one of its other compact datacenter GPUs, the T4. The latter is based on the TU104 GPU featuring the Turing architecture from 2018, so it is hardly surprising that the new GPU is 2.2–3.1 times faster than its predecessor in MLPerf 3.0, depending on the workload.

“In addition to stellar AI performance, L4 GPUs deliver up to 10x faster image decode, up to 3.2x faster video processing, and over 4x faster graphics and real-time rendering performance,” Salvator wrote.

Without a doubt, the benchmark results of Nvidia’s H100 and L4 compute GPUs, which are already offered by major systems makers and cloud service providers, look impressive. Still, keep in mind that we are dealing with benchmark numbers published by Nvidia itself rather than independent tests.

Source: https://www.tomshardware.com/information/nvidia-publishes-mlperf-30-performance-of-h100-l4